This year’s APJC leg of Cisco Live drew an eager crowd of thousands to Melbourne to hear about Cisco’s latest innovations. As always, there was an incredible buzz in the expo hall and the 4-day agenda was packed with announcements, leaving no doubt about the AI-powered direction the industry is heading.

Here are the standout announcements that captured my attention:

First up, and deservedly so, is HyperFabric – a prime example of Cisco using a single solution to address two major data centre challenges – cabling complexity and the configuration of advanced network architectures.

I recently had the opportunity to meet with Cisco’s product lead and experience the end-to-end process of designing and deploying switches with HyperFabric. I can confidently say this platform would have saved me countless weeks – if not months – of stress back in my engineering days!

Cabling is a common pain point in data centres, and I didn’t expect Cisco to tackle it, but they have. With an easy click-and-drag interface to design your data centre switch fabric, HyperFabric generates the necessary cabling plan and highlights any errors as you connect the switches. Even junior engineers will be able to instantly identify and fix misconnected cables.

Once everything is correctly connected, switches are automatically configured into stacks, and connectivity is optimised. Cable diagrams are auto-generated, making patching straightforward for anyone, and eliminating many hours of troubleshooting.
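To make the cabling check concrete, here is a minimal sketch of comparing a planned cabling layout against what is actually plugged in. It is not HyperFabric's implementation or API. The `Link` type, the `check_cabling` function, and the switch and port names are all hypothetical, chosen only to illustrate how a misconnected cable can be flagged.

```python
from typing import NamedTuple


class Link(NamedTuple):
    """One cable: a port on one switch connected to a port on another."""
    switch_a: str
    port_a: str
    switch_b: str
    port_b: str


def normalise(link: Link) -> Link:
    """Order the two ends so that A-B and B-A compare as the same cable."""
    end_1 = (link.switch_a, link.port_a)
    end_2 = (link.switch_b, link.port_b)
    first, second = sorted([end_1, end_2])
    return Link(first[0], first[1], second[0], second[1])


def check_cabling(planned: list[Link], observed: list[Link]) -> dict[str, list[Link]]:
    """Compare the cabling plan with the links that are actually connected."""
    planned_set = {normalise(link) for link in planned}
    observed_set = {normalise(link) for link in observed}
    return {
        "missing": sorted(planned_set - observed_set),     # in the plan, not patched
        "unexpected": sorted(observed_set - planned_set),  # patched, not in the plan
    }


# Hypothetical plan for a tiny leaf-spine fabric.
plan = [
    Link("leaf-1", "eth1", "spine-1", "eth1"),
    Link("leaf-2", "eth1", "spine-1", "eth2"),
]
# What was patched: leaf-2 has gone into the wrong spine port.
seen = [
    Link("spine-1", "eth1", "leaf-1", "eth1"),
    Link("leaf-2", "eth1", "spine-1", "eth3"),
]

report = check_cabling(plan, seen)
for problem, links in report.items():
    for link in links:
        print(problem, link)
```

A planned link that is missing alongside an unexpected link on the same switch is the typical signature of a cable plugged into the wrong port.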

Even better, Cisco has embedded much of the value of its Application Centric Infrastructure (ACI) software fabric into an AI-driven workflow. While engineers still assign traditional VLANs through an intuitive graphical interface, HyperFabric works behind the scenes to construct a robust fabric switching architecture.

This architecture sets the stage for upcoming enhancements such as micro-segmentation and identity-based access. While customers appreciated the promise of Cisco ACI, many found its complexity daunting due to the skills and resources required. HyperFabric simplifies this entirely, creating a foundation for data centre networks that are more secure, higher performing, and far easier to manage.

Innovative solutions can redefine the game, and the newly launched HyperShield is no exception. With organisations navigating emerging vulnerabilities, tighter patch windows, and complex change management, it’s no surprise that Cisco’s HyperShield has quickly gained the attention of leading players in finance, retail, and government sectors.

HyperShield’s standout feature is its ability to embed traffic filtering directly into the network fabric, potentially reducing or eliminating the reliance on standalone firewalls. For example, in a scenario where a critical vulnerability emerges, HyperShield allows organisations to mitigate threats by leveraging server firmware and enhanced switch capabilities, even before a patch can be deployed.

For those wary of network updates, HyperShield’s dual data plane provides peace of mind. It enables automated testing and seamless upgrades while ensuring any unforeseen issues can be rolled back without disruption. By simplifying these traditionally risky processes, HyperShield helps organisations maintain uptime while staying secure.
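One way to picture the dual data plane idea is a live policy that keeps handling traffic while a candidate policy is evaluated against a mirrored copy of that traffic. The sketch below is an assumption-laden illustration, not Cisco's implementation. The policy functions, packet fields, and the `promote_if_safe` helper are all hypothetical.

```python
from typing import Callable

Packet = dict  # e.g. {"src": "10.0.0.5", "dst_port": 443}
Policy = Callable[[Packet], bool]  # returns True to allow the packet


def current_policy(packet: Packet) -> bool:
    """The policy serving live traffic today: allow everything."""
    return True


def candidate_policy(packet: Packet) -> bool:
    """Hypothetical mitigation: block a port exposed by a new vulnerability."""
    return packet["dst_port"] != 8443


def verdict_changes(live: Policy, shadow: Policy, mirrored: list[Packet]) -> list[Packet]:
    """Packets the shadow policy would treat differently from the live one."""
    return [p for p in mirrored if live(p) != shadow(p)]


def promote_if_safe(live: Policy, shadow: Policy, mirrored: list[Packet],
                    is_expected: Callable[[Packet], bool]) -> Policy:
    """Promote the shadow policy only if every changed verdict was intended."""
    changes = verdict_changes(live, shadow, mirrored)
    if all(is_expected(p) for p in changes):
        return shadow
    return live  # keep the existing policy; live traffic was never affected


mirrored_traffic = [
    {"src": "10.0.0.5", "dst_port": 443},
    {"src": "10.0.0.9", "dst_port": 8443},
]

active = promote_if_safe(
    current_policy,
    candidate_policy,
    mirrored_traffic,
    is_expected=lambda p: p["dst_port"] == 8443,
)
print("candidate promoted:", active is candidate_policy)
```

Because the candidate only ever sees mirrored traffic until it is promoted, a failed test leaves the live policy untouched.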

In essence, HyperShield will give your organisation more proactive threat mitigation, streamline updates, and provide a network architecture designed to adapt to modern security challenges.

This was an update many have been waiting for – how Cisco will be integrating Splunk into its portfolio. This integration combines Splunk’s powerful data analytics and security tools with Cisco’s networking and telemetry expertise, giving customers a unified view of their networks, applications, and security systems.

With Splunk’s analytics combined with Cisco’s data and telemetry, organisations can detect and resolve issues significantly faster – sometimes in minutes rather than hours or days. Machine learning further enhances this by predicting potential problems before they occur, reducing downtime and improving the overall user experience.

Cisco has further enhanced its security offerings by integrating Splunk with its Extended Detection and Response (XDR) platform. In this case, it delivers advanced threat detection and faster response times, bolstering overall security. Additionally, the integration of Splunk with Cisco ThousandEyes enhances observability across networks and applications, providing organisations with comprehensive insights into network performance and user experience. In short, customers will experience faster and more effective issue resolution, even in complex environments.

Cisco and Splunk have clearly shown the power of a strong strategic partnership, combining technologies to deliver a more robust data platform that converts raw information into practical, data-driven insights.

As people return to offices, the need to seamlessly merge the digital and physical worlds has become increasingly relevant. Cisco Spaces isn’t new, but with Jeetu Patel now driving Cisco’s product strategy, integrations seem to be happening at an impressive pace.

Given Cisco’s huge range of video devices, handsets, wireless access points, and environmental monitors, they’re effectively one of the biggest sensor companies on the planet. Cisco Spaces has taken this to the next level by adding digital intelligence to physical workplaces.

From what I’ve seen, facility managers can really leverage this data to fine-tune office layouts, keep an eye on occupancy levels, and even save on energy costs by tweaking lighting and temperature based on actual usage. Employees and visitors get perks like indoor navigation, alerts when a workspace frees up, and friction-free setups for meetings and collaborations.

Safety and well-being are also front and centre. Cisco Spaces monitors factors such as air quality and noise levels, which goes a long way toward making the office a more pleasant place to be. This focus on blending operational efficiency with user comfort is perhaps what truly differentiates Cisco Spaces from other platforms.

By bridging the gap between physical spaces and digital insights, Cisco Spaces is again transforming workplaces into environments that boost productivity, collaboration, and employee comfort.

What struck me most at Cisco Live this year wasn’t just the technology itself, although it was, at times, quite impressive. Rather, it was the way Cisco is tackling real-life problems with simplicity and pragmatism. Whether it’s making data centres less painful to manage, securing networks without adding complexity, or turning office buildings into intelligent, responsive environments, the focus was squarely on technology that fits into how people and businesses actually operate. It’s an exciting time to be part of this industry!

If you want to know more about Cisco’s AI advancements, check out our Security Minutes videos with Cisco – AI Edition. Richard Dornhart, Data#3’s Security Practice Manager, sits down with Carl Solder, Cisco’s Chief Technology Officer ANZ, to discuss the role of AI in cyber security – from Cisco’s latest AI-powered solutions to the challenges of adversarial AI.

Acquisitions inevitably bring change, and Broadcom’s acquisition of VMware – a leading global provider of on-premises virtualisation solutions – has certainly shaken things up.

Since acquiring VMware, Broadcom has restructured its business, spinning off units like Carbon Black (security) and Horizon (desktop virtualisation). It has shifted the remaining products from perpetual to subscription-only models and reduced the total number of licensable products by bundling them.

While this bundling simplifies procurement, it can lead to higher costs if the included products aren’t all relevant to your needs. The shift to subscription licensing has also resulted in noticeable increases in renewal prices, with some businesses experiencing hikes of 100%, 300%, or even 500%.
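It is easy to misread percentage hikes, so here is the arithmetic spelled out. A hike of 100% doubles the price, while a hike of 500% means paying six times the original amount. The starting figure below is purely hypothetical and is not drawn from any real renewal quote.

```python
def renewal_after_hike(current_price: float, hike_percent: float) -> float:
    """Price after an increase of hike_percent on top of current_price."""
    return current_price * (1 + hike_percent / 100)


# Hypothetical current renewal cost, for illustration only.
current = 100_000.0
for hike in (100, 300, 500):
    new_price = renewal_after_hike(current, hike)
    multiple = new_price / current
    print(f"{hike}% hike: {new_price:,.0f} ({multiple:.0f}x the current price)")
```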

These price uplifts are presenting an interesting opportunity, with many organisations reconsidering their infrastructure strategies and assessing competing technologies. Instead of continuing with VMware on-premises, they’re exploring alternatives, such as switching to different hypervisors (e.g., Azure Stack HCI, Nutanix) or migrating to the cloud.

If, like many, you’re considering other options, you have no doubt already considered one, or all of the below:

Microsoft’s Azure VMware Solution (AVS) offers some elegant answers to these considerations, addressing interoperability, flexibility, ease of integration and, perhaps surprisingly, cost.

AVS offers a comprehensive, hosted VMware service on dedicated Azure infrastructure. Jointly engineered by VMware and Microsoft, AVS is built and managed by Microsoft – recently named a Leader in the 2024 Gartner® Magic Quadrant™ for Strategic Cloud Platform Services (SCPS) – with flexible pricing models that make it an attractive alternative to traditional on-premises setups.

Let’s explore AVS in more detail, starting with its portfolio of Software-Defined Data Center (SDDC) clusters:

AVS is deployed in clusters with a minimum of three hosts, including both storage and networking. It provides a quick, seamless path to the cloud for businesses running multiple VMware virtual machines. Use cases range from datacentre expansion, reduction, or retirement to disaster recovery, business continuity, and application modernisation. Its flexible disaster recovery options also support seamless integration from on-premises to AVS, between AVS clusters, or directly to Azure services.

AVS is particularly appealing to businesses already considering a cloud journey but not yet ready to modernise their entire application stack. After all, re-factoring or modernising applications during migration can be time-consuming and costly.

By comparison, AVS offers a ‘migrate to modernise’ approach, enabling businesses to migrate first, realise immediate cost savings that can be diverted to fund their application modernisation, and then gradually modernise. Once on Azure, companies can tap into a range of native services to accelerate application development.

AVS can integrate with your on-premises vSphere environment. But perhaps the biggest advantage of AVS is its seamless integration within the Azure ecosystem. Businesses can continue running their virtual machines on VMware while gradually modernising applications on Azure, all within a connected environment. By incorporating Azure services for security, backup, and monitoring, AVS effectively streamlines operations and reduces the need for on-premises servers, storage, and hardware. Given these services often make up a large share of compute resources, this approach can significantly lower capacity requirements. With less IT overhead, teams can focus on higher-value activities, easing the shift from traditional VMware setups by allowing businesses to re-group and migrate workloads at their own pace.

Migration from on-premises VMware to hosted VMware on Azure is seamless, with minimal disruption. AVS uses the same VMware virtualisation platform, allowing workloads to move easily and quickly without the need for refactoring. With HCX vMotion technology, you can extend your on-premises VMware networks to AVS, enabling virtual machines to migrate without reallocating IP addresses.

Another significant benefit of AVS is that it eliminates the need to re-skill employees or introduce new tools. Businesses can continue using the same skills and personnel that manage their on-premises VMware environment. IT administrators access familiar tools for configuration and management, reducing the learning curve and maintaining the level of control they have come to expect with VMware.

When you migrate your VMware with AVS, Microsoft serves as your single point of contact for all issues. Furthermore, if VMware’s involvement is needed, Microsoft manages that on your behalf. Microsoft also handles the underlying platform and infrastructure, freeing up your operational staff to focus on higher-value activities and reducing overall support demands.

Microsoft’s scale and negotiating power mean they can offer VMware licensing at very favourable prices compared to on-premises VMware. AVS offers flexible pricing options, allowing customers to choose pay-per-hour usage or reserve capacity for one, three, or five years. Instead of investing in and managing their own servers, businesses can lease VMware environments from Microsoft, benefiting from predictable and affordable pricing. Customers can also lock in discounted rates for longer terms, with reserved instances that can convert to Azure IaaS.
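The choice between pay-per-hour and reserved capacity comes down to how much of the year the hosts will actually run. The sketch below shows one way to frame that comparison. Every rate in it is a made-up placeholder, not a Microsoft price, and the function names are hypothetical, so substitute figures from your own quote.

```python
HOURS_PER_YEAR = 24 * 365


def pay_as_you_go_cost(hourly_rate: float, hosts: int, hours_used: float) -> float:
    """Cost when each host is billed only for the hours it runs."""
    return hourly_rate * hosts * hours_used


def reserved_cost(yearly_rate_per_host: float, hosts: int, years: int) -> float:
    """Cost when capacity is reserved for a fixed term."""
    return yearly_rate_per_host * hosts * years


def break_even_utilisation(hourly_rate: float, yearly_rate_per_host: float) -> float:
    """Fraction of the year a host must run before reserving becomes cheaper."""
    return yearly_rate_per_host / (hourly_rate * HOURS_PER_YEAR)


# Placeholder rates for illustration only (not real AVS pricing).
HOURLY_RATE = 10.0        # per host, per hour
RESERVED_YEARLY = 52_560  # per host, per year on a one-year term
HOSTS = 3                 # a cluster at the three-host minimum

on_demand = pay_as_you_go_cost(HOURLY_RATE, HOSTS, HOURS_PER_YEAR)
reserved = reserved_cost(RESERVED_YEARLY, HOSTS, years=1)
threshold = break_even_utilisation(HOURLY_RATE, RESERVED_YEARLY)

print(f"Always-on, pay per hour: {on_demand:,.0f}")
print(f"Always-on, reserved:     {reserved:,.0f}")
print(f"Reserving pays off above {threshold:.0%} utilisation")
```

For workloads that run around the clock, the reserved option wins under these placeholder rates. For short-lived disaster recovery or burst capacity, hourly billing may be the cheaper route.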

AVS offers a compelling combination of cost savings, reduced management overhead, and seamless migration, making it an ideal choice for VMware customers looking to modernise at their own pace.

As always, a thorough evaluation and total cost of ownership (TCO) analysis are essential to determine the best-fit solution – whether that’s AVS or an alternative approach. Some potential limitations of migrating to AVS include:

Data#3 can help determine if AVS is the right fit for your business through a comprehensive solution assessment involving:

Your organisation may be eligible to access Microsoft funding to undertake this assessment, providing a risk-free way for you to explore the benefits of migrating to AVS. Contact our team using the form below to find out if you qualify.

Click here to read how a Government department cut costs and future-proofed their operations with an Azure VMware Solution from Data#3.

Data#3 brings extensive experience in delivering datacentre solutions, from procurement and deployment to seamless migration. As a leading Microsoft cloud provider and Azure Expert Managed Service Provider (MSP), Data#3 leverages its deep partnership with both Broadcom and Microsoft to offer clients robust, integrated solutions that simplify and optimise their IT infrastructure.

A new device without some variety of embedded AI barely qualifies as a product launch these days. At least the team behind Microsoft Surface thinks so because the latest Surface Copilot+ devices to hit the shelves come packed with AI-driven capabilities. But before we look at the devices themselves, we need to talk a little about the new processors.

First integrated into Surface devices with a Windows 11 update in 2023, Copilot was part of Microsoft’s broader push to embed AI-powered tools directly into their ecosystem, starting with the Office suite and expanding into Windows.

But in the AI era, that’s old news. This latest round of Surface devices has AI capabilities right down to the core, or rather, in their new ARM-based processors and Neural Processing Units (NPUs). It’s what Microsoft calls “high-octane AI acceleration”.

The ARM chips powering these devices (Qualcomm Snapdragon®️ X Elite and Plus processors) represent a significant change from traditional Intel-based chips. Thanks to their Reduced Instruction Set Computing (RISC) design, ARM chips are faster and more energy-efficient than x86 processors from Intel and AMD.

This has led to around 99% of the premium smartphone market being powered by ARM*. However, compatibility issues with legacy software and hardware have prevented ARM from gaining widespread adoption in the desktop and laptop market. This gap is narrowing, though, as ARM-native applications and app-emulation tools advance. Microsoft is undoubtedly betting on ARM’s success, buoyed by Qualcomm’s benchmarks, which have surpassed the performance of both Apple’s M2 and Intel’s Core Ultra 7 chips. That leads us back to the launch of these two new devices.

A powerful combination of AI and processing power makes the Surface Laptop 7th Edition (available in 13.8-inch and 15-inch versions) an excellent choice for enterprises looking to boost productivity and enhance collaboration.

Processing power | Features robust on-device AI capabilities, thanks to the Snapdragon®️ X Elite and Plus processors (86% faster than Surface Laptop 5!) and integrated NPU.

Battery life and portability | The mind-boggling 22 hours of battery life on the 15-inch and 20 hours on the 13.8-inch (based on local video playback use) ensure uninterrupted performance, while fast charging ensures quick re-fuelling, so users stay in flow.

Connectivity | Features Wi-Fi 7 along with dual USB-C / USB 4 ports, allowing you to connect multiple peripherals or external displays.

Display | Near-edgeless 15-inch and 13.8-inch PixelSense Flow touchscreens feature adaptive colour, contrast, and HDR for a bright and responsive display under dim or bright lights.

Keyboard and touchpad | The laptop includes an improved silent keyboard experience with a dedicated Copilot key for quick access to AI-powered Copilot features. It also has a large haptic precision touchpad.

Camera | The HD 1080p Surface Studio Camera, combined with Windows Studio Effects driven by AI, gives virtual meetings a professional look and feel, while AI-driven features like background blur and voice clarity make communications clear.

Sustainability | Made from a minimum of 67.2% recycled materials, this device will appeal to environmentally conscious businesses.

A versatile tablet-to-laptop ARM-based device with unbeatable upgrades in processing power, AI capabilities, and enterprise-ready features, including security and repairability.

Processing power | Features robust on-device AI capabilities, thanks to the Snapdragon®️ X Elite and Plus processors (86% faster than Surface Laptop 5!) and integrated NPU.

Battery life and portability | ARM processors consume less power, so it’s no surprise users can expect up to 14 hours of battery life for Wi-Fi-only models. This is fantastic news for employees who work remotely, in the field, or have travel-heavy roles. And if power runs low, there’s fast charging to get those bars up quickly.

Connectivity | With Wi-Fi 7 and 5G support, users get faster, more reliable connectivity along with NFC for secure authentication, which is essential for remote work, video conferencing, and accessing cloud apps. The dual USB-C / USB 4 ports allow multiple peripherals or external displays to be connected, so you can configure your workspace to best suit your needs.

Display | Optional 13″ OLED display with HDR technology, adaptive colour, and high contrast and brightness for a clear viewing experience in any light conditions.

Keyboard and Pen | Users will enjoy a satisfyingly silent typing experience with the Surface Pro Flex detachable keyboard with Precision Haptic touchpad and Surface Slim Pen – which handily charges right on the keyboard.

Camera | Video conferencing is a breeze with an ultrawide camera paired with AI-driven enhancements through Windows Studio Effects.

Sustainability | Align with your organisation’s environmental responsibility. Pro 11th Edition uses at least 72% recycled materials in the enclosure.

On-device AI features | Beyond the handy Copilot key, both devices share a few notable on-device AI capabilities driven by the NPU.

Repairability | Like other Surface devices, both editions have Microsoft’s simplified repair process with replaceable components so they can be quickly repaired and returned to service.

Security | Advanced security measures include Windows Hello and Microsoft Pluton technology, which provides chip-to-cloud protection. The ability to manage risks directly from the device adds an extra layer of trust.

It’s important to note that these devices are not generational replacements for the respective Surface 6th and 10th editions; they are available in parallel. This is because they’re fundamentally consumer-focused devices, but their specs and affordability still make them suitable for specific roles in the enterprise. While features like the ability to transform simple sketches into refined designs add appeal, it is creative and AI-driven environments that will realise the devices’ actual value, making them more suited for companies looking to develop specialised applications tailored to their needs rather than for general enterprise use.

To fully unlock the AI-powered capabilities of these devices, it’s important to understand the difference between the free, public version of Copilot and the licensed enterprise version. The free version offers basic functionalities, like web searches, while the licensed enterprise version provides advanced features, such as internal resource searching within company documents and databases. You will need the licensed version to access these deeper, business-critical AI features and get the most out of these devices. Data#3’s Copilot for M365 Readiness Assessment helps you prepare for deployment so that you’re ready to leverage its full potential.

Another essential consideration is remembering that these are ARM-based devices, not Intel. This may have incompatibility repercussions for businesses that rely on specific networking, management and security software, device drivers and legacy applications built for Intel-based devices.

If you’re considering adding either of these devices to your fleet, you must assess your current infrastructure for compatibility. Our ARM readiness assessment evaluates your hardware, software and peripherals to determine whether your current systems can support ARM-based devices. For example, you will need Windows 11 as part of your SOE. Our assessment will give you a clear picture of the potential adjustments or upgrades required to fully integrate these ARM devices into your workflow.

Learn more about Data#3’s ARM readiness assessment >

In this new Surface lineup, Microsoft is betting on the future of AI-accelerated computing with ARM, positioning these devices as forward-thinking choices for businesses that are going all-in on AI technologies.

But take note: these devices are primarily aimed at organisations that have already adopted, or plan to adopt, AI-powered solutions and tools within their operations.

This makes these devices an ideal fit if:

Your users value the ability to perform on-device AI tasks without relying on the internet

You want to introduce and test devices that are able to support emerging AI trends

Your company wants to explore custom-built AI models tailored to specific tasks

However, businesses must assess their environment for compatibility, especially regarding device drivers and app readiness. You can also test drive these devices by requesting a trial unit, and experience their AI-powered features first hand.

With over 15 years’ experience improving device fulfilment and implementation for our clients, Data#3 will help you end the frustrations associated with device sourcing, deployment and warranties. We even offer training and adoption services to upskill your people on their new devices, to ensure the greatest return from your investment.

We were also recently named Microsoft Surface PC Reseller Worldwide Partner of the Year, Microsoft Surface Reseller of the Year for Asia, and the Microsoft Surface+ Worldwide Partner of the Year.

This isn’t another “what is zero-trust” article – I think we can all agree that we’ve moved beyond that as we know it isn’t a product, it’s not a replacement for firewalls or VPNs, and it’s not something you do and then move on.

However, it is essential and appears in some form on virtually every government department’s cybersecurity strategic plan. Some departments and agencies have made progress and implemented elements of the zero-trust model within their environment, but not at a broad enough level to provide the promised levels of protection. Hence, despite the plan, they’re still vulnerable to a cyber-attack.

If zero trust is essential and part of a plan, why are government departments and agencies struggling to implement it? This post will explore that question.

The imperative to adopt zero-trust security has never been clearer for government departments and agencies. In an era of remote work, cloud-based services, and increasingly sophisticated cyber threats, zero-trust is an additional, identity-based layer that reduces the reliance on increasingly ineffective perimeter defences.

Driven by mandates from federal, state, and local authorities (such as the new Cyber Security Bill 2024), and the recognition that a new security model is needed, government entities are eager to embrace the principles – but reality on the ground tells a different story. Despite the strategic importance of zero trust, many government entities are struggling to turn that vision into tangible action for a number of reasons.

As a result, many government organisations find themselves stuck in a paradoxical situation. They know zero trust is where we all need to be, but the path remains elusive. Instead of bold action, their security roadmaps remain tactical and address the next pressing need rather than being a strategic, long-term plan that is continuously checked and aligned to.

In a recent discussion, a financial industry CISO revealed that these pitfalls are all too common. Despite an acknowledgement of the need for zero trust in their cybersecurity plan, and a multi-million dollar investment, they also:

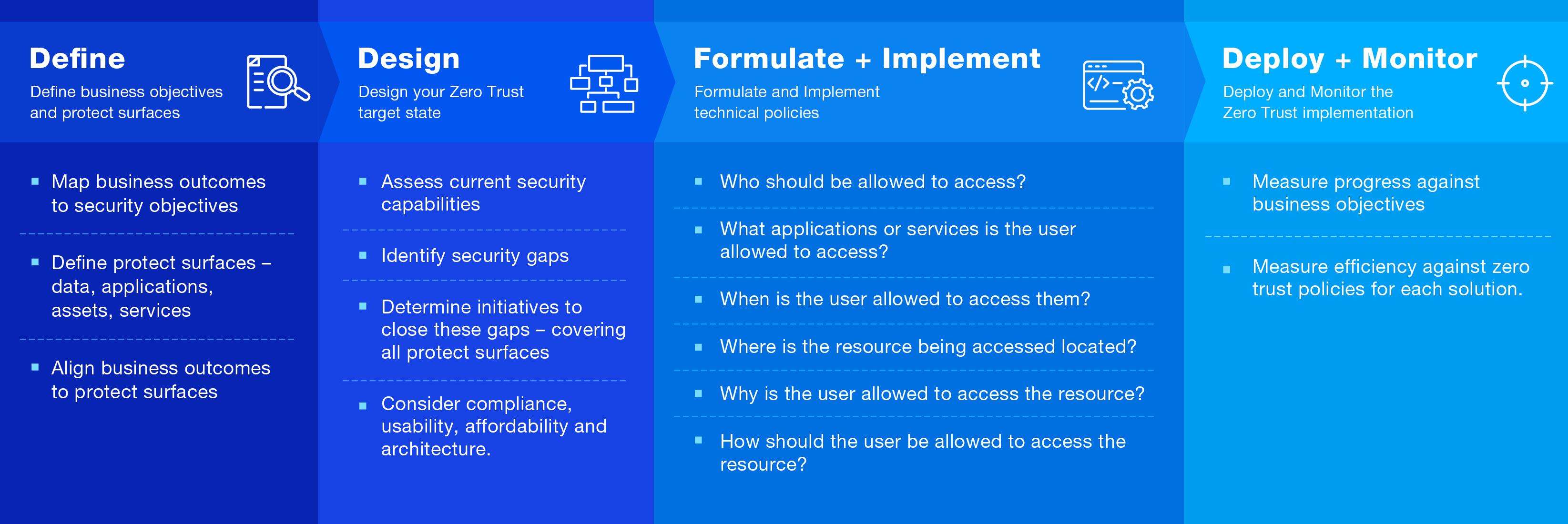

Breaking free of this paradox requires a fundamental shift in mindset and approach. Rather than viewing zero trust as a product- or tool-based, all-or-nothing proposition, government agencies must embrace a more strategic, process-driven incremental path forward. They can chart a course towards zero trust success by focusing on their most critical assets, prioritising use cases, and partnering with experienced advisors who take this process-driven approach.

Without trivialising the difficulties of implementing zero trust, there are some principles to consider:

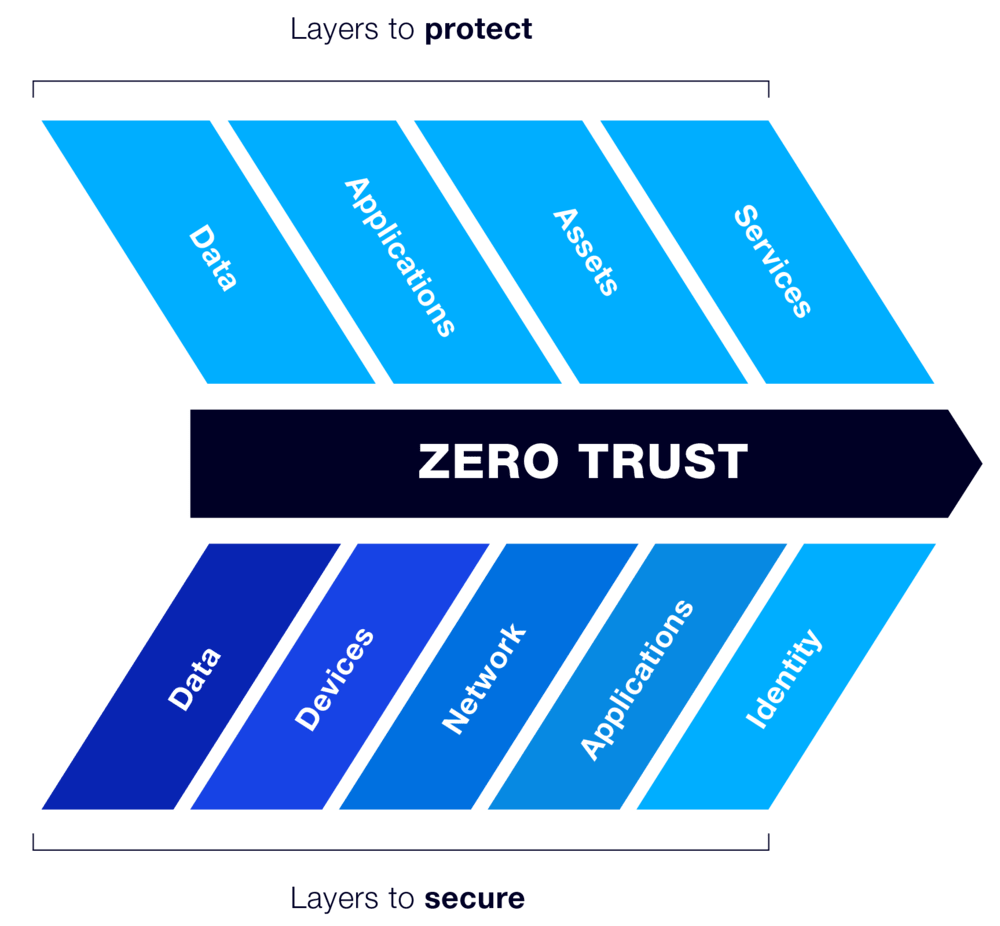

Do this by conducting an audit to pinpoint the most sensitive data, applications, and systems requiring the highest level of protection. Then, identify your Protect Surface DAAS elements – Data, Applications, Assets, Services.

While tools and solutions are a component of the zero-trust model, they too often become the focus of government security teams looking for tangible ways to move forward. While tools can provide valuable data points, implementing zero trust effectively requires a more holistic, process-driven approach. Simply relying on a tool to assess one’s zero-trust posture is insufficient.

That’s why working with experienced advisors like Data#3 and Business Aspect, who can guide you through a comprehensive readiness assessment and the development of a practical zero-trust roadmap, is critical. This process-oriented approach, rather than a tool-centric one, can ensure that government entities have a clear understanding of their current state, their priorities, and the steps needed to achieve their zero trust goals.

This includes:

The final factor is understanding the vendor landscape. Vendor solutions are a critical implementation component, and aligning the right vendor solution is easier for a partner like Data#3, with its extensive vendor relationships and accreditations.

For example, government entities that have made significant investments in Cisco networking could use Data#3’s 25+ year relationship with Cisco to access their extensive security portfolio and zero-trust capabilities.

Implementing zero trust is a marathon, not a sprint. Government entities can chart a course toward a more secure, adaptable, and future-proof security architecture by taking a phased, strategic approach – identifying critical assets, assessing current capabilities, and partnering with experienced advisors. If you would like to discuss further, please reach out to me using the contact button below or contact your account manager.

Data#3, in partnership with Cisco, will be hosting Security Resilience Assessment Workshops in 2025. These workshops will guide you through a self-assessment of your security posture using the updated CISA Zero Trust Model.

Register your details below to receive an invitation.

In today’s digital age, data security and regulatory compliance are essential for businesses across industries. Protecting sensitive information, while ensuring that communication within an organisation aligns with compliance standards, can be challenging. This is especially the case for those operating in highly regulated sectors like finance, healthcare, legal services and government agencies.

Microsoft’s Information Barriers (IB) in Microsoft 365 offers a robust solution to these challenges, providing organisations with the tools to prevent unauthorised communication and ensure compliance with industry regulations. This blog will explore what Information Barriers are, how they work, and why they are crucial for businesses in today’s regulatory landscape.

Information Barriers (IB) are a set of controls within Microsoft 365 that allow organisations to block or restrict communication between specific groups or individuals by segmenting groups of users or departments. These barriers are particularly useful in scenarios where there is a requirement to prevent potential conflicts of interest, safeguard sensitive data, or comply with regulatory requirements.

Common scenarios include:

Education: Students in one school aren’t able to look up contact details for students at other schools.

Legal: Maintaining the confidentiality of data that is obtained by the lawyer of one client and preventing it from being accessed by a lawyer for the same firm who represents a different client.

Government: Information access and control are limited across departments and groups.

Professional services: A group of people in a company is only able to chat with a client or a specific customer via guest access during a customer engagement.

Microsoft Information Barriers are designed to control the flow of information within an organisation by limiting who can communicate with whom. Microsoft 365 admins can set up these barriers to block communications across Exchange Online email, Teams chats and collaboration, SharePoint Online and OneDrive for Business.

Key features include:

For example, a financial firm might restrict communication between its investment research team and its sales team to avoid conflicts of interest. Once an Information Barrier policy is in place, these two teams wouldn’t be able to communicate or share information with each other via Microsoft Teams, Exchange, or SharePoint, ensuring compliance with regulatory guidelines.
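The research and sales scenario above can be modelled in a few lines. This is a conceptual toy, not how Microsoft 365 is configured, since real Information Barriers are set up through Microsoft's own admin tooling. The `BarrierModel` class, the user names, and the choice to treat every block as applying in both directions are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BarrierModel:
    """Toy model: users belong to segments, and policies block segment pairs."""
    segment_of: dict[str, str] = field(default_factory=dict)
    blocked: set[frozenset[str]] = field(default_factory=set)

    def assign(self, user: str, segment: str) -> None:
        """Place a user in exactly one segment."""
        self.segment_of[user] = segment

    def block(self, segment_a: str, segment_b: str) -> None:
        """Block communication between two segments, in both directions."""
        self.blocked.add(frozenset({segment_a, segment_b}))

    def can_communicate(self, user_a: str, user_b: str) -> bool:
        seg_a = self.segment_of.get(user_a)
        seg_b = self.segment_of.get(user_b)
        if seg_a is None or seg_b is None:
            return True  # assumption: unsegmented users are not restricted
        return frozenset({seg_a, seg_b}) not in self.blocked


# Hypothetical users and segments.
model = BarrierModel()
model.assign("ana", "Investment Research")
model.assign("ben", "Sales")
model.assign("cai", "Legal")
model.block("Investment Research", "Sales")

print("ana <-> ben:", model.can_communicate("ana", "ben"))
print("ana <-> cai:", model.can_communicate("ana", "cai"))
```

The key design point is that policies are written against segments rather than individuals, so moving a user between departments changes what they can reach without anyone editing the policy itself.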

The benefits of Information Barriers extend beyond compliance with regulatory requirements. They provide a holistic approach to safeguarding sensitive information and controlling internal communications, while also improving organisational efficiency and collaboration.

Implementing Information Barriers in Microsoft 365 requires careful planning to ensure alignment with your organisation’s needs, regulatory compliance and minimal disruption.

Data#3’s Microsoft experts will guide you from design to seamless implementation, applying best practices to meet your compliance and security requirements. We also provide documentation and training to ensure ongoing policy management.

Protect sensitive data, control internal communications and stay compliant with Microsoft’s Information Barriers. Speak to a Microsoft Security Specialist today to enhance your Microsoft 365 environment.

The introduction of artificial intelligence (AI) into our professional lives is not just a technological upgrade – it’s a cultural revolution. Adoption of Copilot for Microsoft 365 (M365) is rapidly changing what the typical workday looks like. This change naturally brings about a lot of questions – including what exactly you should be asking your new AI tools for help with.

At Data#3, our Copilot for M365 Working Group’s recent discussions have shed light on a pivotal shift in our work ethos. The group explored the concern that some individuals might feel reluctant to ask AI questions in areas where they believe they should already know the answers, or worry about how their inquiries might be perceived by their supervisors. After a lengthy discussion, the group concluded that asking even seemingly simple questions of AI should not only be acceptable – but encouraged.

The heart of this discussion revolved around the idea of a major cultural shift surrounding expertise, and what it means in the workplace. Using AI is not about abandoning our expertise – it’s about augmenting it. The integration of AI into the workplace provides an opportunity to validate what we already know, learn new things and continue pushing the boundaries of what we can achieve.

In one of our recent Copilot for M365 Working Group workshops, the Data#3 team considered how asking AI questions they already know the answer to might help them become more adept at harnessing the power of AI.

Steve Bedwell, our technical maestro, might ask, “What are the latest advancements in Copilot Studio?” While Steve is well-versed in this subject, his interaction with Copilot serves as a springboard for generating a nuanced technical narrative, enriched by his own expertise.

On the business front, James Creighton, Practice Manager at Data#3, could pose the question, “How can we leverage emerging markets for growth?” Although he is familiar with this topic, Copilot’s insights could help him craft a business strategy that’s both innovative and grounded in data-driven analysis, in significantly less time than doing so manually. In this example, AI provides articulate content that can be moulded to what he needs, rather than him creating it from scratch.

Deborah-Ann Allan, Principal Consultant, known for her sharp insights, might inquire, “What are effective change management strategies when deploying cutting-edge technology?” Through Copilot’s insights, she can utilise ideas that can be tailored to resonate with organisational culture.

Our master trainer in Modern Work technologies, Alice Antonsen, might ask, “How can we enhance remote collaboration through training?” With Copilot, she can quickly and efficiently draft comprehensive training materials, which she can then refine through her deep understanding of the subject.

These are just some brief examples of how you might generate value by prompting Copilot for M365 with a question you already know the answer to. In practice, you’ll likely need to follow your initial prompts with additional ones to refine the output. By continuing to ask questions of your AI tools, you can craft your output to a stage where you’re relatively happy with a first draft. After that, you bring your know-how and experience, your thoughts and your heart to review and manually add the finishing touches.

That’s why it’s critical for organisations to make time for conversations like these. As we continue to navigate this cultural shift, it’s essential to recognise that asking AI for assistance is not a sign of incompetence – it’s a sign of strategic thinking. It’s also an acknowledgement that while we may know the answers, there’s always room for growth, refinement, and a fresh perspective.

As another example, we used Copilot for M365 to help draft this blog based on the transcript of an internal discussion. The initial draft made it easy to bring together several voices and provided a foundation to build on our team’s knowledge and language preferences – most importantly, in significantly less time. The ideas were ours, and the discussion took place between humans, but Copilot was able to create a well-structured blog once we provided clear directions on how to do so.

The journey we’re on at Data#3 is one of transformation, where AI becomes an integral part of our intellectual toolkit. It’s a journey that encourages curiosity, fosters innovation, and ultimately – we believe – leads to a more dynamic and empowered workforce. By embracing this change, we can become more confident in using the power of our questions to unlock the full potential of AI in our workplace.

Interested in finding out more about how we can help you along your AI journey? Reach out to our team today.

From pioneering PDF technology to industry-leading creative platforms, Data#3 and Adobe help organisations embrace digitalisation and transform the way that work gets done.

With a relationship spanning 20 years, Data#3 has become Adobe’s largest Australian partner. This means we’re uniquely positioned to simplify licensing and help customers maximise their software investment.

AI is a topic that prompts a lot of opinions beyond the realms of the IT industry. From mainstream and specialist media to politicians and business leaders, a lot of voices are weighing in. There are the scare stories and dire warnings, the hyperbole and promises and, somewhere in between, the truth.

It is with this backdrop that Adobe AI Assistant has entered the fray. True to their creative roots, Adobe has approached both the challenges and potential of AI with their customary blend of design genius and out-of-left-field thinking. For anyone using Adobe products – yes, from Acrobat Reader upwards – the working day might be about to get a lot better.

Adobe’s strength lies in designing essential digital tools for anyone who wants to create, no matter if that’s pulling together a perfectly presented report or dreaming up an award-winning ad campaign. They opted to partner with Microsoft for the AI engine behind their offering, basing AI Assistant on Microsoft Azure OpenAI Service. It is both an acknowledgement that Microsoft is the best AI platform of the moment, and a choice to focus on delivering something uniquely Adobe that integrates beautifully into the world of their users.

There are some important differences between the way Adobe uses Microsoft Azure OpenAI Service and the Microsoft offering. This positions AI Assistant as complementary to Copilot for Microsoft 365 (M365), rather than in competition. When you query Copilot, it will source input from a vast ecosystem including M365 documents, the web, emails, and Teams chats. In contrast, AI Assistant sources information solely from files that you open in Acrobat. That information is not used to train AI Assistant or Microsoft’s models, and what you create is only retained for 12 hours on the Adobe system.

We know from experience that the way change is managed is usually the deciding factor between resounding success and underwhelming results. Given the extraordinary shift that AI promises, it is no surprise that most technology-progressive organisations are in a phase of assessment and preparation for Copilot. As one of just a handful of Australian businesses selected to be part of Microsoft’s Copilot Early Access Program (EAP), we’ve been fortunate to get hands-on experience of the phenomenal capabilities it brings.

At the centre of this is understanding risk and preparing a safe introduction. All this takes a bit of time. Copilot for M365 preparedness assessments and plans have been keeping our consultants busy as they help customers understand the implications for their data, the opportunities unique to their businesses, and the elements that will make their rollout a success. Because Copilot may harvest source documents from across your ecosystem, the right rules must be put in place to protect sensitive information.

Adopting Adobe’s AI Assistant is a far simpler decision, and it is quick and easy to put in place. It is a great way to get users started on AI while you prepare for Copilot for M365. The primary reason for this speed is that users are working with sources they provided, meaning they can reasonably be expected to have permission to access that information. Their sources are not used to train Adobe’s model, so whatever data they’re working with goes no further than their own piece of work.

Adoption might be one of the quickest decisions you make, but that certainly doesn’t mean the effect will be trivial. How you use AI Assistant will depend on your industry, your role, and what you’re trying to achieve. We’re seeing individual users find interesting applications for AI Assistant that relate to their own workflows.

From a user perspective, it is very easy to get started. Say, for example, someone wants to create a report based on a lengthy document. They can open the document in Acrobat and get an upfront summary of the key insights in next to no time. The individual or business can then engage with their documents using natural language to significantly accelerate their time to knowledge. The user might add documents – in Word, PDF, even PowerPoint – that can lend extra information on their topic. They effectively have a conversation with AI Assistant as it guides them through the process.

One of the very handy features of AI Assistant is that it includes citations to the exact source of every bit of information, so it is simple for the user to check accuracy. This is designed to make it quicker to understand and work with documents, not to replace the human element.

Another feature coming soon is that when the user is happy with the content they have created, they can choose a template and their own brand logo to make the report look professional. Because it is designed to integrate seamlessly with Adobe Creative Cloud, users can also extend to create professional web pages, eye-catching social posts or just about anything, ready to publish in seconds.

In education, an admin might use AI Assistant to review multiple lengthy policy documents to create a summary and build a perfectly presented report, saving themselves hours or days of reading to summarise the main points. The faster time to understanding will give a lot more time to dedicate to the million other tasks they are likely juggling.

For government, whose path to Copilot for M365 is likely to be among the lengthiest due to the nature of the data in their ecosystems, AI Assistant is a chance to dip their toes in the AI water. When proposing policy, for example, a government user may typically wade through numerous documents and data sources, where trying to find the most relevant information is like looking for the proverbial needle in a haystack. AI Assistant puts in the hard yards of hunting down those gems, so the user can work faster and put more focus into interpreting key points and presenting them in the most effective way. It is in this collaboration between human and AI where the magic happens.

Adobe has a few sample prompts you can play with if you want to get a quick look at what it can do. Just ask your Data#3 Account Manager, and that hundred-page report on your to-read list can be smashed out by lunchtime.

Interested in finding out more about how Data#3, in partnership with Adobe, can help your organisation make the most of the latest AI opportunities? If the answer is yes, get in touch with us today.

Many tools and templates are available to help develop a cyber incident response plan (CIRP). In this blog, we’ll outline a simplified approach to CIRP planning and explore how advanced extended detection and response (XDR) solutions can streamline your CIRP.

An organisation’s ability to respond appropriately to a cyber security incident is a critical capability that must be developed, maintained, and refined – you can’t just rely on your security infrastructure to do the job. Much like business continuity and disaster recovery, handling a critical incident requires a carefully considered plan. This plan should ensure the right actions are taken, and that the people, processes, and technology capabilities are in place to support it. Incident response planning is an essential governance and process control within any cyber security framework. However, according to an ASIC Spotlight on Cyber Report, 33% of organisations still don’t have a CIRP despite the significant focus on cyber security in the media and at an executive level.

An effective approach to developing a CIRP should draw on principles from industry standards and guidance, such as those published by ISACA and NIST, ISO 27001/27002 and the Australian Government Cyber Security Operations Guidelines. It’s important to remember that the plan is an evolving document – not set and forget – and just one part of a best practice-aligned security operations environment. We’ve included best practices for incident response planning a little later in this blog.

In the first blog of this series, we discussed the challenges of breach detection and the XDR tools improving detection rates. The effectiveness of any XDR tool directly impacts your CIRP – i.e., if your XDR tool can accurately detect and respond to a breach, your CIRP should consider these initial steps to avoid unnecessary escalations. Built-in capabilities can also simplify CIRP development.

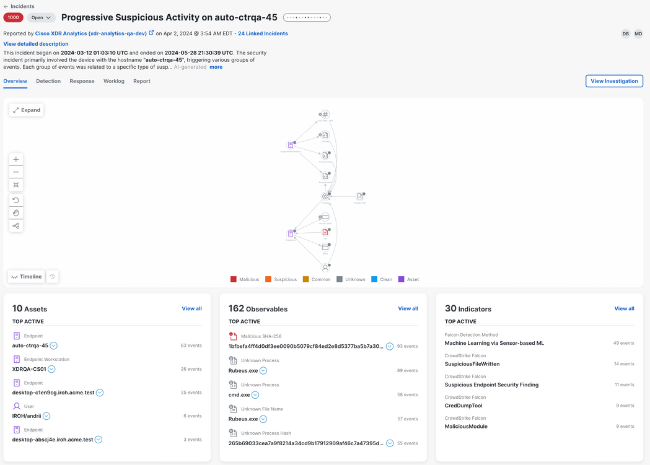

Using Cisco’s XDR solution as an example, incidents promoted from security events are listed and ranked based on a priority score calculated from:

This ensures the most critical detections are surfaced at the top of the list, allowing your team to focus on what matters most.
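As a rough illustration of this ranking, the Python sketch below sorts incidents by a priority score, highest first. The `Incident` class, its field names and the sample values are assumptions made for this example; they are not Cisco XDR’s data model, and the score is taken as given rather than calculated.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    # Hypothetical fields for illustration; not Cisco XDR's schema.
    title: str
    priority_score: int


def rank_incidents(incidents: list[Incident]) -> list[Incident]:
    """Return incidents ordered from highest to lowest priority score."""
    return sorted(incidents, key=lambda i: i.priority_score, reverse=True)


incidents = [
    Incident("Suspicious login", 420),
    Incident("Ransomware behaviour on file server", 910),
    Incident("Phishing email reported", 250),
]

for incident in rank_incidents(incidents):
    print(incident.priority_score, incident.title)
```

Because the list is rebuilt from the scores each time, a newly promoted incident with a high score would appear at the top without any manual triage.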

You can then drill down further into the incident detail and view an attack graph that displays a compacted relationship view of the events causing the incident, and the targeted devices, entities, and resources. Having this information easily accessible allows for a clear decision-making framework to be developed in your CIRP, outlining when to escalate further and when the incident has been contained and/or eradicated.

If further response action is required, Cisco XDR can manage your CIRP via playbooks that guide teams through incident response to effectively identify, contain, and eradicate the threat, then restore systems and recover.

These playbooks include tasks for all phases of incident response and the ability to document findings throughout the process. Some tasks also include workflows to automate parts of the response, and playbooks can be customised depending on the type of threat, e.g., ransomware vs data breach vs phishing. They can also be customised if needed according to your own CIRP, with the tasks and assignment rules developed for your organisation.

If we take a step back from the product-level capabilities of XDR, what should a best practice-aligned security operations environment look like? Many organisations define capabilities across five service areas – as per the European Union Agency for Cybersecurity (ENISA) whitepaper on CSIRT and SOC good practice:

Source: ENISA Good Practice Guide: How to set up CSIRT and SOC

Aligning your organisational program, accountabilities, and processes with these standards provides a robust framework for addressing security response capabilities while ensuring alignment with an organisation’s cyber security strategy.

At Business Aspect and Data#3, when we advise customers on developing or refining a CIRP, we break the planning process down into three broad phases. These are adapted to your environment and context, while integrating and aligning with your existing organisational risk framework, processes, policies, and subsequent recovery processes. The plan should also integrate with existing processes including crisis management, disaster recovery, data restoration, business continuity, and key communications plans.

The CIRP typically includes:

The CIRP should also integrate with any detection methods associated with security operations processes and escalation paths. Good practice involves developing pragmatic incident response strategies that align with your organisation’s capabilities and culture. Notably, a key component of any CIRP is effective and appropriate communication both within and outside the organisation.

Finally, while it is important to have plans in place, it is equally important to test them thoroughly to validate the supporting policies and procedures and ensure system operability. Organisations should implement a CIRP testing strategy that includes key members of the response process, including core team members, organisational stakeholders, and external partners where appropriate.

Without a CIRP, you risk making mistakes during incident response that can exacerbate the unfolding crisis. Even if you have a CIRP, how much could your response capabilities be improved by adopting XDR?

Whether starting from scratch or needing to review and refine an existing CIRP to include XDR, it can be hard to know where to start.

That’s where existing templates can help – but solutions like Cisco XDR can also simplify the incident response planning process by providing a series of default actions and processes to follow that are already embedded in the tool responsible for detecting threats. It’s important to note that this approach won’t build you a comprehensive, overarching CIRP, but it does provide an easier, low-level starting point for developing it. More importantly, it gives you something concrete to work with quickly, while you take the time to add additional details and customise the plan to meet your organisation’s specific requirements.

Data#3 has one of Australia’s most mature and highly accredited security teams. Working in partnership with Cisco, we have been helping our customers achieve a more connected and secure organisation for more than 25 years. We can assist you with building your CIRP, testing and refining existing response plans, or with advice on simplifying and strengthening your security environment.

To learn more about these services and Data#3’s approach to incident response planning, contact us today for a consultation or to discuss a free trial of Cisco XDR.

Cyber security is a field rife with challenges, a dynamic landscape where IT teams continuously strive to outpace malicious adversaries. However, new strategies and innovations are continually emerging to safeguard users, applications, infrastructure, and digital assets of organisations, regardless of their location.

In our recent blog, we explored the idea of security tool consolidation as a path to simplifying environments that seem to be increasing exponentially in complexity. Now, we’re narrowing our focus to a particularly challenging area for security teams – breach detection and response. The ability to detect a breach quickly and respond in time to stop or reduce the impact of an attack is critical. An IBM report in 2022 calculated the average time to detect and contain a cyber attack is 287 days. There are other stats and averages quoted through various surveys, but the reality is that too many breaches go undetected – or are detected too late for any kind of proactive defence.

The complexity and evasion tactics of cyber attackers are at the heart of missed breaches. Attack methodologies continually evolve in sophistication, designed to bypass traditional defences unnoticed, like ghosts in the night. Monitoring tools help, but despite advancements, alert management remains a delicate balancing act to minimise false positives (and negatives). Environmental complexity also impacts this, with 43% of respondents to an RSA Conference survey saying their number one challenge in threat detection and remediation is an overabundance of tools, so alerts just aren’t seen.

If that’s not enough to worry about, then the number of possible attack vectors, each with streams of data that need to be correlated to gain a picture of what is actually happening, takes the challenge to a whole new level.

Another factor in the difficulty of detecting breaches is the way the breach is executed. In our experience, compromised user credentials remain a significant cause of undetected cyber security breaches, often stemming from human error, misuse of privileges, social engineering attacks, and, crucially, stolen credentials. The Notifiable Data Breaches Report from July to December 2023, published by the Office of the Australian Information Commissioner (OAIC), records that a significant proportion of data breaches resulted from cyber security incidents where compromised or stolen credentials were involved. Further substantiation comes from the 2024 Verizon Data Breach Investigations Report (DBIR), which continues to spotlight credential compromise as a dominant threat in the cyber security landscape.

In these scenarios, once an attacker gains access, they are careful to avoid behaviour that would trigger an abnormal activity alert until they’re ready to quickly exfiltrate data and get out before any response can stop them. Defending against this requires a high level of cyber security maturity and resources such as a Security Operations Centre (SOC) to configure and operate the right tools.

A glaring skills shortage makes this level of maturity difficult to achieve. Without experienced cyber security experts, gaps in the configuration of defensive measures go unrectified, and teams lack the knowledge needed to interpret alerts and understand what is happening at different points in time. These are gaps that attackers can easily exploit.

Constructing a totally impregnable defence might be impossible, but there are still ways to improve your capabilities, such as:

In discussions with customers, our teams have noticed a growing perception that AI will solve all their cyber security problems. In sporting parlance, it’s viewed as a “Hail Mary pass” – an all-or-nothing attempt to win.

While AI is still in relative infancy in a security context, there’s little doubt it offers the potential to better automate complex and data-intensive tasks with the granularity required to manage subtle differences in breach events. It can also help less experienced security teams unravel the overwhelming volume of threat indicators needed to better predict attackers’ movements. However, it’s not an instant panacea.

Like any new tool, it can’t transform a poorly designed and managed environment. For example, if you’re not currently patching all your infrastructure consistently, AI won’t solve all your problems.

AI’s efficacy is also tethered to data – vast oceans of it, raising flags around privacy. The integrity and confidentiality of sensitive information become key concerns as AI models feast upon data to hone their predictive prowess. Despite its acumen, AI is not immune to the false positives and negatives that currently frustrate security teams.

Moreover, as security teams use AI more, so do adversaries, leading to an AI arms race. It’s a high-stakes game of innovation and counter-innovation, where staying ahead requires constant vigilance and adaptation. Maintenance and calibration become critical in keeping AI defences effective. Without regular updates and tuning, its effectiveness can rapidly diminish.

While Extended Detection and Response tools have been around for a while, recent advances in capabilities, such as Cisco XDR’s integration with newly acquired Splunk ES, have given them new momentum. They address many of the threat detection challenges outlined above by leveraging the power of integration, analytics, and automation.

By amalgamating data from various sources – be it network devices, cloud environments, endpoints, or email systems – Cisco XDR provides security teams with a much-needed comprehensive view of their infrastructure. This centralisation of detection and response capabilities can help address the challenge of shadow IT and scattered defences by making it harder for that activity to ‘fly under the radar’. Coupled with advanced analytics and machine learning, the platform identifies and isolates anomalous behaviour, cutting through the noise of false positives and focussing on genuine threats.

Automation in XDR not only accelerates the resolution of threats, but also streamlines the management of security alerts, alleviating the burden on security teams and combating alert fatigue.

Finally, the platform’s integration capabilities ensure that an organisation’s plethora of security tools can operate in concert rather than in isolation. This unity enhances the overall efficacy of defence mechanisms and simplifies the security management landscape.

Despite these advancements, resourcing challenges can still hinder organisations, and this is where outsourcing arrangements are growing in popularity.

Today’s outsourcing offerings are more nuanced. While a fully managed SOC is still a possibility, specific capabilities such as managed SASE, SD-WAN or XDR can be chosen individually or collectively from a menu of options. This hybrid approach offers a middle ground, complementing existing in-house resources with access to higher levels of expertise. It’s a partnership where control and collaboration merge to form increasing levels of cyber security maturity.

To learn more about how Data#3 can enhance your cyber security capabilities, contact us today for a consultation or to discuss a free trial. Discover how we can help you stay ahead of cyber threats and protect your digital assets with the latest in breach detection and response technology.

JuiceIT 2024 Guest Blog – The convergence of IT infrastructure, AI, and sustainability presents a complex challenge for organisations. Data centres, the backbone of modern IT, are significant energy consumers. Moreover, the rapidly growing field of AI is further driving up energy demands. Together, these factors necessitate innovative solutions to minimise environmental impact. In this quest for sustainability, Data Centre Infrastructure Management (DCIM) emerges as a critical tool.

DCIM offers a holistic view of critical infrastructure operations, enabling organisations to optimise resource utilisation, reduce energy consumption, and minimise waste. Its role in supporting sustainability challenges is multifaceted.

Energy Efficiency:

Resource Optimisation:

AI is a game-changer for making IT infrastructure more sustainable. By analysing vast amounts of data, AI can optimise resource utilisation, predict failures, and streamline operations, leading to significant reductions in energy consumption and waste. By implementing AI-driven strategies, IT departments can significantly lower their carbon footprint while improving operational efficiency and cost-effectiveness.

While Data Centre Infrastructure Management is a powerful tool, several challenges must be addressed to fully realise its sustainability potential:

To overcome these challenges, organisations should prioritise data quality, invest in training, and adopt a phased approach to Data Centre Infrastructure Management implementation.

Achieving sustainability in IT infrastructure and AI requires a collaborative effort. By leveraging Data Centre Infrastructure Management, organisations can significantly reduce their environmental impact. Additionally, partnerships with technology providers, industry associations, and governments can accelerate progress towards a sustainable future.

DCIM is a crucial component of a comprehensive sustainability strategy. By embracing DCIM and integrating it with AI, organisations can create more efficient, resilient, and environmentally responsible IT infrastructures.

Schneider Electric’s award-winning DCIM solution is EcoStruxure IT. This vendor-neutral portfolio offers automated sustainability reporting features that traditionally required deep technical understanding. Unlike anything else available on the market, the new model offers customers a fast, intuitive, and simple-to-use reporting engine to help meet regulatory requirements, including the European Energy Efficiency Directive. Please visit our EcoStruxure IT web pages for more information.